Learning objectives

- Know the basics of how a function works

- Understand how to read (import) data into R

- Explore data within R using

View() - Understand how to write (export) data from R

- Understand how to read and write different data formats (e.g., excel, csv, shp, rds, dbf, access)

What’s a function?

Functions are how work gets done in R, and before we

jump into reading and writing data, we need to know how functions work

because we will use functions to perform these tasks.

A function takes any number of arguments and, performs some transformations, and returns an output.

For example, the R function sum() takes any

number of numeric arguments and adds them together (recall you can view

the documentation for sum() by entering ?sum).

Let’s add 1 and 2 like so:

sum(1, 2)

[1] 3In R, we can create sequences easily. If we wanted to

create a sequence of numbers from 1 to 10, we can use the function

seq(), which takes 3 arguments: from (start

value), to (end value), and by (increment of

the sequence).

seq(0, 10, 1)

[1] 0 1 2 3 4 5 6 7 8 9 10Convince yourself that creating sequences of arbitrary length is possible. Can you create a sequence from 0 to 1 million by an increment of 500?

Because creating sequences incremented by 1 are so common, there’s a

special shorthand for these sequences, 1:10. Let’s take the

sum of the sequence from 1 to 10 by providing it as an argument to the

function sum():

sum(1:10)

[1] 55sum() and seq() are two of many functions

you’ll encounter in R. Like all functions, they take inputs

(arguments) and return an output. To take advantage of functions, we

need to apply them to our data. Let’s now use import and export

functions in R to explore some water resources data.

Read (import) data

Data come in many formats, and R has utilities for

reading and writing all kinds of data. In this lesson, we’ll explore

some of the most common data formats you’ll encounter in the wild, and

the functions used to import these data.

csv (comma separated values)

The comma separated value, or .csv, is a simple and

effective way to store tabular data. To read a csv file, we first import

the {readr} library, which contains the function

read_csv(). Let’s read a file from our

data/gwl folder that contains station information for

groundwater level monitoring sites in California. You can also type

“data/” and press Tab with your cursor just after the

“/” to view all files in that path.

library(readr)

# read the "stations" csv, save it as an object called "stations", and print the object

stations <- read_csv("data/gwl/stations.csv")

head(stations)

# A tibble: 6 × 15

STN_ID SITE_CODE SWN WELL_NAME LATITUDE LONGITUDE WLM_METHOD

<dbl> <chr> <chr> <chr> <dbl> <dbl> <chr>

1 51445 320000N1140000… <NA> Bay Ridge 35.6 -122. USGS quad

2 25067 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

3 25068 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

4 39833 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

5 25069 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

6 38479 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

# … with 8 more variables: WLM_ACC <chr>, BASIN_CODE <chr>,

# BASIN_NAME <chr>, COUNTY_NAME <chr>, WELL_DEPTH <dbl>,

# WELL_USE <chr>, WELL_TYPE <chr>, WCR_NO <chr>You can also pass a URL to read_csv().

# read the "stations" csv from the Github URL

stations <- read_csv("https://github.com/r4wrds/r4wrds/blob/main/intro/data/gwl/stations.csv?raw=true")

R tells us upon import that this data has 43,807 rows and 15 columns.

We can verify this with the nrow() and ncol()

functions, and the dim() function:

Whenever we see rectangular data like this in R, it’s probably a

data.frame object, but just to check, we can always ask R

to tell us what the class of the object is:

class(stations)

[1] "spec_tbl_df" "tbl_df" "tbl" "data.frame" The printed output shows us the first few rows of the stations data

we just read, but if we wanted to dig a bit deeper and see more than 10

rows and 7 columns of data, we can use the function View(),

which in RStudio opens a data viewer.

View(stations)

Within the viewer, we can search the data.frame, sort rows, and scroll through the data to inspect it.

A data.frame is made of many vectors of the same length. We can

access a column using the $ operator, and subset the vector

with bracket notation [. To access the first row in the

WELL_TYPE column:

stations$WELL_TYPE[1]

[1] "Part of a nested/multi-completion well"If we wanted the WELL_TYPE entries 1 through 10, we can

subset by a vector of the sequence from 1 through 10:

stations$WELL_TYPE[1:10]

[1] "Part of a nested/multi-completion well"

[2] "Unknown"

[3] "Unknown"

[4] "Unknown"

[5] "Unknown"

[6] "Unknown"

[7] "Unknown"

[8] "Part of a nested/multi-completion well"

[9] "Unknown"

[10] "Unknown" Sometimes it’s helpful to count unique variables in a column, especially for categorical data such as the well type.

table(stations$WELL_TYPE)

Part of a nested/multi-completion well

1778

Single Well

12053

Unknown

29976 xlsx and xls (Excel files)

Excel files are very common, and R has great utilities for reading in and processing excel files. Calenviroscreen data comes in excel format, which we can read in like so:

library(readxl)

ces <- read_xlsx("data/calenviroscreen/ces3results.xlsx")

head(ces, 10) # print the first 10 rows

# A tibble: 10 × 57

`Census Tract` `Total Population` `California County` ZIP

<dbl> <dbl> <chr> <dbl>

1 6019001100 3174 Fresno 93706

2 6071001600 6133 San Bernardino 91761

3 6019000200 3167 Fresno 93706

4 6077000801 6692 San Joaquin 95203

5 6019001500 2206 Fresno 93725

6 6037204920 2598 Los Angeles 90023

7 6077000300 2396 San Joaquin 95203

8 6019001000 4106 Fresno 93706

9 6037206050 2146 Los Angeles 90023

10 6019000400 6343 Fresno 93721

# … with 53 more variables:

# `Nearby City \r\n(to help approximate location only)` <chr>,

# Longitude <dbl>, Latitude <dbl>, `CES 3.0 Score` <dbl>,

# `CES 3.0 Percentile` <dbl>, `CES 3.0 \r\nPercentile Range` <chr>,

# `SB 535 Disadvantaged Community` <chr>, Ozone <dbl>,

# `Ozone Pctl` <dbl>, PM2.5 <dbl>, `PM2.5 Pctl` <dbl>,

# `Diesel PM` <dbl>, `Diesel PM Pctl` <dbl>, …By default, read_xlsx() reads in the first sheet.

However, there may be many sheets in an excel file. If we want to read

in a different sheet, we can tell R which sheet to read in, and even how

many lines to skip before reading in data.

metadata <- read_xlsx("data/calenviroscreen/ces3results.xlsx",

sheet = 2,

skip = 6)

metadata

# A tibble: 66 × 3

`Variable Name` Description `CalEnviroScre…`

<chr> <chr> <chr>

1 "Census Tract" Census Tra… <NA>

2 "Total Population" 2010 popul… <NA>

3 "California County" California… <NA>

4 "ZIP" Postal ZIP… <NA>

5 "Nearby City \r\n(to help approximate… City or ne… <NA>

6 "Longitude" Longitude … <NA>

7 "Latitude" Latitude o… <NA>

8 "CES 3.0 Score" CalEnviroS… <NA>

9 "CES 3.0 Percentile" Percentile… <NA>

10 "CES 3.0 Percentile Range" Percentile… <NA>

# … with 56 more rowsChallenge 1

- open the documentation for

read_xlsx()using?(Hint: type?read_xlsxin the console and hit enter) - read through the Arguments to get a sense of what else the function can do

- Read in the “Index Summary” sheet of

"data/healthy_watersheds/CA_PHWA_TabularResults_170518.xlsx", and select the appropriate number of rows to skip. - What happened to column names during the read?

Click for Answers!

health <- read_xlsx("data/healthy_watersheds/CA_PHWA_TabularResults_170518.xlsx",

sheet = 2,

skip = 4)

head(health)

# A tibble: 6 × 14

`Watershed Name` HUC12 ECOREGION STATE Score...5 Percentile...6

<chr> <chr> <dbl> <chr> <dbl> <dbl>

1 Red Spring-Colorado … 1503… 14 CA 0.866 57.2

2 Shadow Canyon 1503… 14 CA 0.861 55.0

3 Eagle Pass 1503… 14 CA 0.844 49.4

4 Mohave Valley-Colora… 1503… 14 CA 0.811 42.4

5 Monumental Pass 1503… 14 CA 0.879 63.2

6 Lobecks Pass 1503… 14 CA 0.855 53.1

# … with 8 more variables: Score...7 <dbl>, Percentile...8 <dbl>,

# `Top 10%` <chr>, `Top 25%` <chr>, Score...11 <dbl>,

# Percentile...12 <dbl>, Score...13 <dbl>, Percentile...14 <dbl>Some column names during the read were renamed because they’re the same in the Excel sheet. In R, a data.frame can have only one unique name per column – duplicate names aren’t allowed! Thus, R renamed those duplicate names. In a later module, we will see how to rename columns within R.

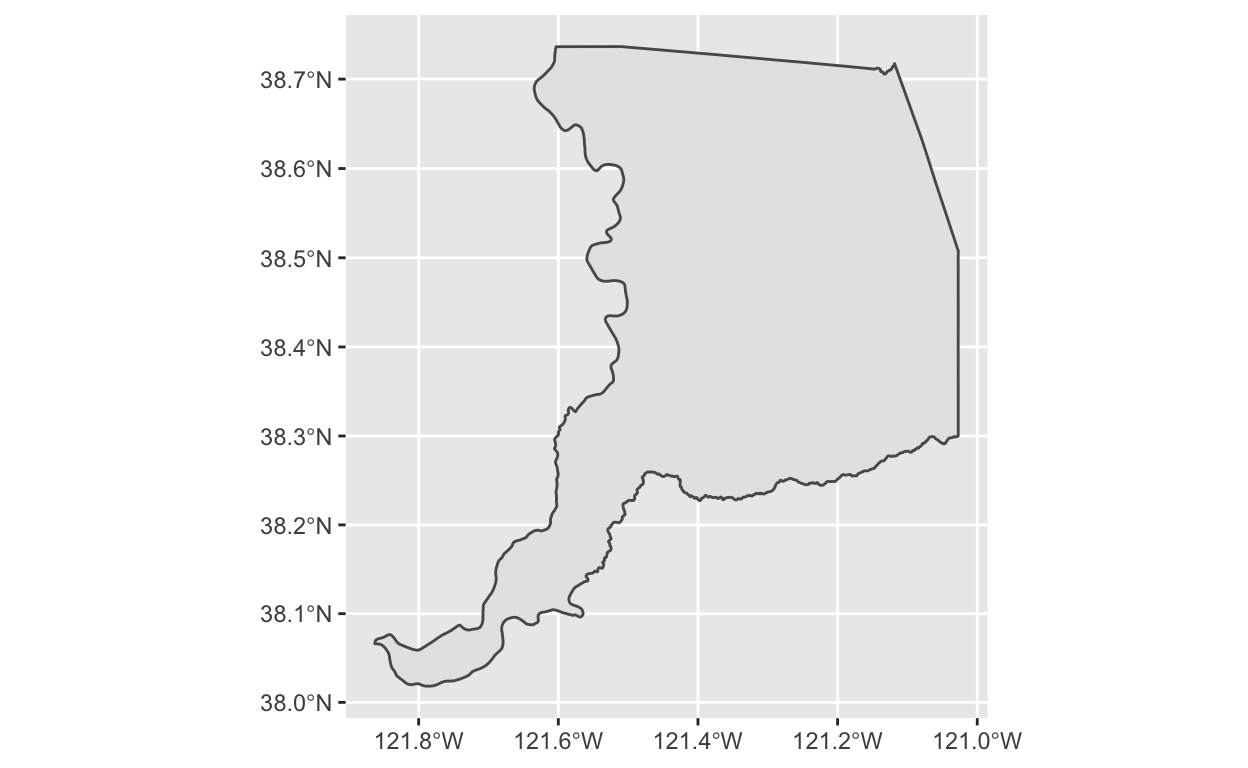

shp (ArcGIS shapefiles)

Geospatial data is ubiquitous. So is the ArcGIS data format, the

shapefile. A georeferenced shapefile is, at minimum made of 4 files:

.shp, .prj, .dbf, and

.shx.

# unzip Sacramento county shapefile

unzip("data/shp/sac_county.zip", exdir = "data/shp/sac")

# read the shapefile

library(sf)

sac_county <- st_read("data/shp/sac/sac_county.shp")

Reading layer `sac_county' from data source

`/Users/richpauloo/Documents/GitHub/r4wrds/intro/data/shp/sac/sac_county.shp'

using driver `ESRI Shapefile'

Simple feature collection with 1 feature and 9 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: -13565710 ymin: 4582007 xmax: -13472670 ymax: 4683976

Projected CRS: WGS 84 / Pseudo-Mercatordbf

.dbf files are one kind of database file. If you’ve ever

opened a shapefile with attribute information, you’ve used a

.dbf file. The foreign package allows us to

read .dbf files into R. Since this is a new package, we

need to install it with install.packages("foreign").

We’ve been loading entire packages with the library()

function, but you can also call a function from a package without

loading it by using

<package_name>::<function_name> syntax. Let’s

load the .dbf file from our Sacramento County polygon.

foreign::read.dbf("data/shp/sac/sac_county.dbf")

OBJECTID COUNTY_NAM COUNTY_ABB COUNTY_NUM COUNTY_COD

1 34 Sacramento County SAC 34 34

COUNTY_FIP Shape Shape.STAr Shape.STLe geometry

1 067 Polygon 4203503574.269531 406962.476293 <NA>rds and .rda

.rds and .rda (.rda is

shorthand for .RData) are a special R-based data formats

used to store R objects. These files can be read just like another other

import functions shown above. Let’s use it to import the groundwater

level station data we read in earlier. Note that a .rds

file can hold any single R object.

# A tibble: 6 × 15

STN_ID SITE_CODE SWN WELL_NAME LATITUDE LONGITUDE WLM_METHOD

<dbl> <chr> <chr> <chr> <dbl> <dbl> <chr>

1 51445 320000N1140000… <NA> Bay Ridge 35.6 -122. USGS quad

2 25067 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

3 25068 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

4 39833 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

5 25069 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

6 38479 325450N1171061… 19S0… <NA> 32.5 -117. Unknown

# … with 8 more variables: WLM_ACC <chr>, BASIN_CODE <chr>,

# BASIN_NAME <chr>, COUNTY_NAME <chr>, WELL_DEPTH <dbl>,

# WELL_USE <chr>, WELL_TYPE <chr>, WCR_NO <chr>Sometimes, you may create an intermediate result that is

time-consuming to recreate from scratch each time, and you want to save

that intermediate result to streamline future analyses. You can export,

or write this object to any number of data formats like a csv, SQL

database, or shapefile. Unlike these data formats however, data saved as

.rds are saved as one of R’s object classes, like

data.frame, vector, list, and so on. In practice, only R is used to read

.rds and .rda files, so these formats are

chosen when we expect to use R to read these data at a later time.

One quick difference between .rds and .rda

files, for .rds files we can only store a single R object

(of any kind). For an .rda file, we can store

many. .rda are also compressed by default.

There are pros and cons we will discuss later.

sqlite

SQLite is an open-source database format based on SQL that’s useful

for storing large datasets locally on your computer. The methods to

connect to a SQLite database, list tables, read tables, and send queries

are similar across other cloud databases you may encounter in the wild,

like Postgres, and enterprise

database systems like Microsoft Access. We use the {here}

package to construct relative paths in the RProject.

library(RSQLite)

library(here)

# location of an sqlite database

dbpath <- here("data/gwl/gwl_data.sqlite")

# actually connect to the database

dbcon <- dbConnect(dbDriver("SQLite"), dbpath)

# list all the tables in the database

dbListTables(dbcon)

[1] "measurements_sep" "perforations" "stations" # get one of the tables into a dataframe

head(dbReadTable(dbcon, "stations"))

STN_ID SITE_CODE SWN WELL_NAME LATITUDE

1 51445 320000N1140000W001 <NA> Bay Ridge 35.5604

2 25067 325450N1171061W001 19S02W05K003S <NA> 32.5450

3 25068 325450N1171061W002 19S02W05K004S <NA> 32.5450

4 39833 325450N1171061W003 19S02W05K005S <NA> 32.5450

5 25069 325450N1171061W004 19S02W05K006S <NA> 32.5450

6 38479 325450N1171061W005 19S02W05K007S <NA> 32.5450

LONGITUDE WLM_METHOD WLM_ACC BASIN_CODE BASIN_NAME

1 -121.755 USGS quad Unknown <NA> <NA>

2 -117.106 Unknown Unknown 9-033 Coastal Plain Of San Diego

3 -117.106 Unknown Unknown 9-033 Coastal Plain Of San Diego

4 -117.106 Unknown Unknown 9-033 Coastal Plain Of San Diego

5 -117.106 Unknown Unknown 9-033 Coastal Plain Of San Diego

6 -117.106 Unknown Unknown 9-033 Coastal Plain Of San Diego

COUNTY_NAME WELL_DEPTH WELL_USE

1 Monterey NA Residential

2 San Diego NA Unknown

3 San Diego NA Unknown

4 San Diego NA Unknown

5 San Diego NA Unknown

6 San Diego NA Unknown

WELL_TYPE WCR_NO

1 Part of a nested/multi-completion well <NA>

2 Unknown <NA>

3 Unknown <NA>

4 Unknown <NA>

5 Unknown <NA>

6 Unknown <NA>head(dbReadTable(dbcon, "measurements_sep"))

STN_ID SITE_CODE WLM_ID MSMT_DATE WLM_RPE WLM_GSE

1 4775 384931N1212618W001 1443624 2004-03-01 118.4 117.4

2 4775 384931N1212618W001 1443625 2003-10-01 118.4 117.4

3 4775 384931N1212618W001 1443622 2003-03-15 118.4 117.4

4 4775 384931N1212618W001 1443620 2002-10-01 118.4 117.4

5 4775 384931N1212618W001 1443621 2001-10-01 118.4 117.4

6 4775 384931N1212618W001 1443623 2001-03-15 118.4 117.4

RDNG_WS RDNG_RP WSE RPE_WSE GSE_WSE WLM_QA_DESC WLM_DESC

1 0 127.0 -8.6 127.0 126.0 <NA> Unknown

2 0 121.7 -3.3 121.7 120.7 <NA> Unknown

3 0 119.5 -1.1 119.5 118.5 <NA> Unknown

4 0 128.9 -10.5 128.9 127.9 <NA> Unknown

5 0 131.4 -13.0 131.4 130.4 <NA> Unknown

6 0 116.5 1.9 116.5 115.5 <NA> Unknown

WLM_ACC_DESC WLM_ORG_ID

1 Water level accuracy is unknown 1

2 Water level accuracy is unknown 1

3 Water level accuracy is unknown 1

4 Water level accuracy is unknown 1

5 Water level accuracy is unknown 1

6 Water level accuracy is unknown 1

WLM_ORG_NAME MSMT_CMT COOP_AGENCY_ORG_ID

1 Department of Water Resources <NA> 1074

2 Department of Water Resources <NA> 1074

3 Department of Water Resources <NA> 1074

4 Department of Water Resources <NA> 1074

5 Department of Water Resources <NA> 1074

6 Department of Water Resources <NA> 1074

COOP_ORG_NAME

1 SACRAMENTO MUNICIPAL UTILITY DISTRICT

2 SACRAMENTO MUNICIPAL UTILITY DISTRICT

3 SACRAMENTO MUNICIPAL UTILITY DISTRICT

4 SACRAMENTO MUNICIPAL UTILITY DISTRICT

5 SACRAMENTO MUNICIPAL UTILITY DISTRICT

6 SACRAMENTO MUNICIPAL UTILITY DISTRICT# pass a query to the database

dbGetQuery(dbcon, "SELECT * from measurements_sep WHERE STN_ID = 4775 LIMIT 5")

STN_ID SITE_CODE WLM_ID MSMT_DATE WLM_RPE WLM_GSE

1 4775 384931N1212618W001 1443624 2004-03-01 118.4 117.4

2 4775 384931N1212618W001 1443625 2003-10-01 118.4 117.4

3 4775 384931N1212618W001 1443622 2003-03-15 118.4 117.4

4 4775 384931N1212618W001 1443620 2002-10-01 118.4 117.4

5 4775 384931N1212618W001 1443621 2001-10-01 118.4 117.4

RDNG_WS RDNG_RP WSE RPE_WSE GSE_WSE WLM_QA_DESC WLM_DESC

1 0 127.0 -8.6 127.0 126.0 <NA> Unknown

2 0 121.7 -3.3 121.7 120.7 <NA> Unknown

3 0 119.5 -1.1 119.5 118.5 <NA> Unknown

4 0 128.9 -10.5 128.9 127.9 <NA> Unknown

5 0 131.4 -13.0 131.4 130.4 <NA> Unknown

WLM_ACC_DESC WLM_ORG_ID

1 Water level accuracy is unknown 1

2 Water level accuracy is unknown 1

3 Water level accuracy is unknown 1

4 Water level accuracy is unknown 1

5 Water level accuracy is unknown 1

WLM_ORG_NAME MSMT_CMT COOP_AGENCY_ORG_ID

1 Department of Water Resources <NA> 1074

2 Department of Water Resources <NA> 1074

3 Department of Water Resources <NA> 1074

4 Department of Water Resources <NA> 1074

5 Department of Water Resources <NA> 1074

COOP_ORG_NAME

1 SACRAMENTO MUNICIPAL UTILITY DISTRICT

2 SACRAMENTO MUNICIPAL UTILITY DISTRICT

3 SACRAMENTO MUNICIPAL UTILITY DISTRICT

4 SACRAMENTO MUNICIPAL UTILITY DISTRICT

5 SACRAMENTO MUNICIPAL UTILITY DISTRICTWrite (export) data

To write (export) data in R you need 2 things: data to write, and a

location and format to write the data. For example, if we wanted to

write our stations data to a csv in the “data_output”

folder, we would do the following:

# write "stations" to a file in the data_output folder called "my_stations.csv"

write_csv(stations, "data_output/my_stations.csv")

Now check that location and verify that your station data was written.

We can do the same for other files:

As before, navigate to these folders to verify these data were written. We can also check to see if these data exist from within R:

my_results <- list.files("data_output")

my_files <- c("sac_county.shp", "sac_county.rds")

# test if your files are in the data_output folder

my_files %in% my_results

# another handy function is `file.exists`, which tells you if your file exists

file.exists("data_output/sacramento_county.shp")

file.exists("data_output/sac_county.shp")

file.exists("data_output/sac_county.rds")

Challenge 2

- create an object called

breakfastand assign it a string with what you had for breakfast. - write that object to

breakfast.rdsfile in/data_output - verify this worked by reading the string back in and inspecting it

Click for Answers!

# create a string and write it to an rds file

breakfast <- "green eggs and ham"

# write_rds takes two arguments: the object to write and location to write it

write_rds(breakfast, "data_output/breakfast.rds")

# read the rds file back into R and save it as a variable

my_breakfast <- read_rds("data_output/breakfast.rds")

# use the `cat()` function (concatenate) to announce your breakfast

cat("Today for breakfast I ate", my_breakfast)

Previous

module:

3. Project management

Next

module:

5. Data visualization